
Implementing Attention Mechanisms in Keras Models

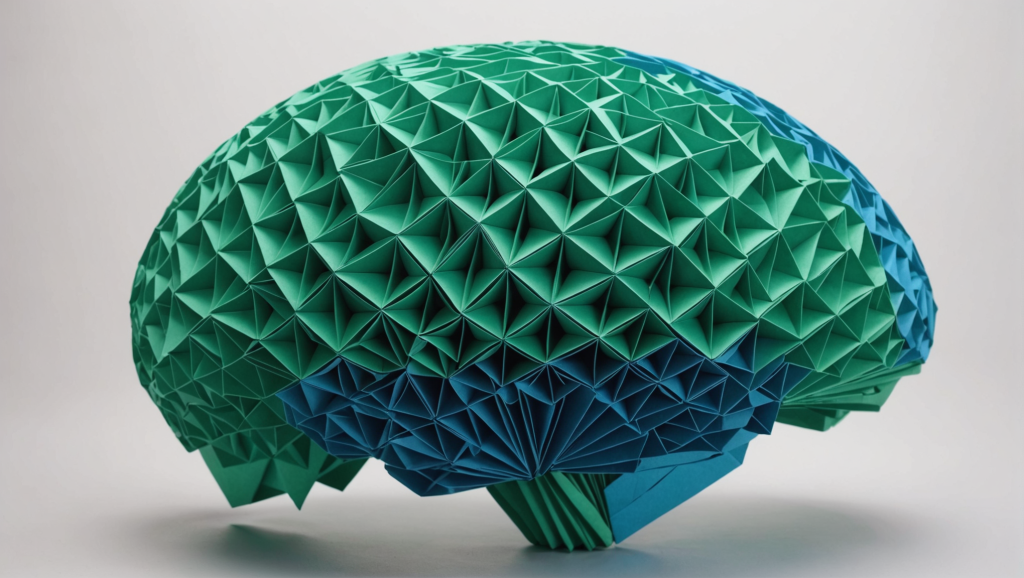

Attention mechanisms let a Keras model weight each part of its input by relevance instead of treating every position equally. In natural language processing this often improves model performance, and the learned attention weights give some insight into which inputs the model relied on. This post explores self-attention and context vectors, which help a model capture long-range dependencies in a sequence.
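As a quick illustration of what self-attention and context vectors look like in practice, here is a minimal sketch using the built-in `tf.keras.layers.Attention` layer (dot-product attention). The tensor sizes and variable names are arbitrary assumptions chosen for the example, not values from this post, and a real model would normally apply attention to learned embeddings rather than random data.

```python
import numpy as np
import tensorflow as tf

# Toy batch (assumed sizes): 2 sequences, 5 time steps, 8 features per step.
rng = np.random.default_rng(0)
x = tf.constant(rng.normal(size=(2, 5, 8)).astype("float32"))

# Dot-product attention. Passing the same tensor as query and value
# makes this self-attention: every time step attends to every other step.
attention = tf.keras.layers.Attention()
context, weights = attention([x, x], return_attention_scores=True)

# Convert to NumPy arrays for inspection.
context = np.asarray(context)  # one context vector per time step
weights = np.asarray(weights)  # attention weights over time steps

print(context.shape)  # (2, 5, 8)
print(weights.shape)  # (2, 5, 5)
```

Each row of `weights` is a softmax distribution over the input positions, and each context vector is the corresponding weighted sum of the value vectors. Inspecting those rows is one way to see which positions the model focused on.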